Imagine visiting an online store that seems to know exactly what you’re looking for, offers suggestions tailored to your taste, and even remembers your favorite colors and styles from a past visit. Feels like magic? That’s the promise of personalization. But now imagine the site also knows what you said in a private chat or what you browsed in incognito mode. Suddenly, that magic begins to feel just a little… creepy.

In the age of AI, personalization is a double-edged sword. On one side, there’s the power to delight users with highly tailored experiences. On the other, there’s the risk of overstepping boundaries and making people feel surveilled. This tension calls for a new approach, one that favors transparency and explicit rules over opaque “black-box” AI systems. The answer lies in designing personalization systems that are not just intelligent, but also ethical, explainable, and user-centric.

Why Personalization Turns Creepy

Most users appreciate a certain level of personalization. It reduces friction, saves time, and often feels like a helpful assistant guiding us through the digital noise. However, personalization shifts into creepy territory when users don’t know:

- What data was collected

- How their data is being used

- Why certain content or recommendations are shown

This ambiguity often stems from opaque AI systems that make decisions without revealing how. These are the so-called black-box models: systems that ingest huge quantities of data, learn patterns from it, and deliver outputs without clearly interpretable logic.

When users can’t understand why a system behaves the way it does, trust erodes—and with it, the entire promise of personalization. It’s essential, therefore, to shift from “creepy but effective” to “respectful and still effective.”

The Problem with Black-Box AI

AI models, especially deep learning models, have grown exceptionally powerful. They can infer emotions, detect trends, and even predict life outcomes based on past behavior. But they’re also complex, often requiring significant expertise to interpret. Even their creators sometimes can’t explain why a given decision was made.

Here’s the trouble: if a user receives a product recommendation based on a black-box model and finds it intrusive or offensive, there’s no straightforward way to understand what triggered it—let alone fix it.

In contrast, rules-based systems, which follow clear, auditable logic, offer more control, accountability, and predictability. While they might not be as “smart” in the AI sense, they come with a major benefit: users and regulators can understand how they work.

Rules Over Black Box: A Better Path to Personalization

So how do we move toward personalization that’s effective without being intrusive? One promising strategy is blending the strengths of both AI and rules-based logic. Here’s how:

- Use rules for boundaries. Set hard limits around what data can be used and what can never be collected—such as sensitive topics, private conversations, or off-platform activity. These boundaries ensure personalization respects user privacy from the start.

- Use AI within the fence. Inside those rules, AI still has an important role. It can analyze preferred themes, browsing patterns, or shopping habits, but only when that behavior was collected with the user’s knowledge and consent.

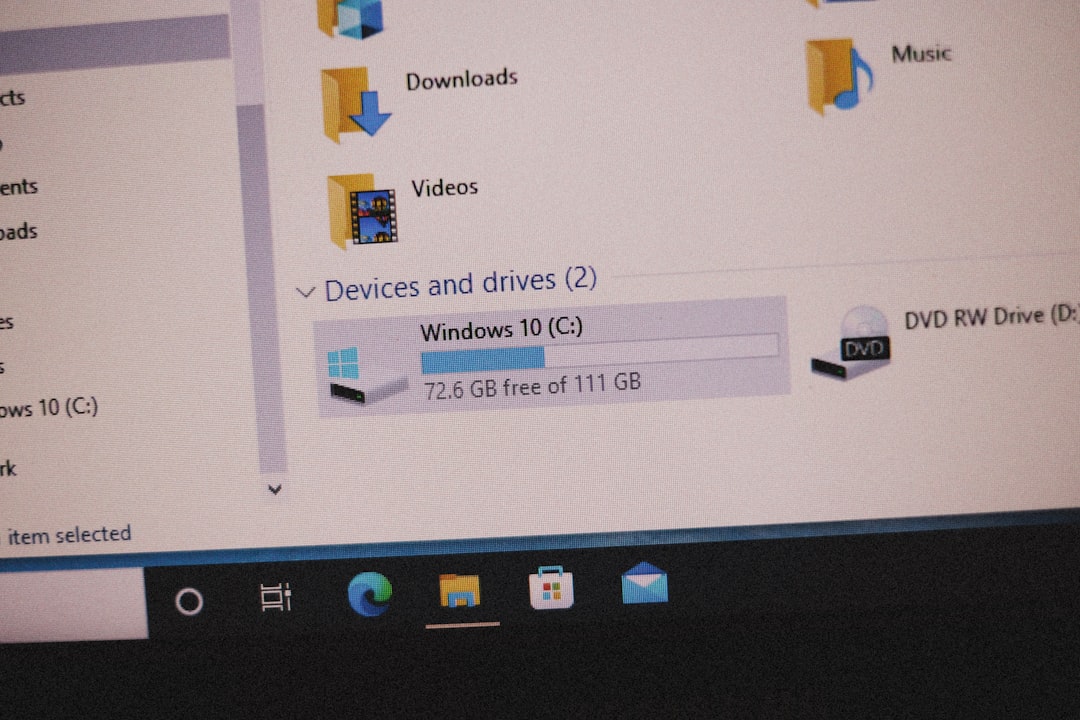

- Offer transparency dashboards. Show users what data is being used, how recommendations are being formed, and give them the option to turn features off.

- Introduce explainability tools. When an AI makes a recommendation, show a short explanation alongside it, such as “This playlist is based on your likes from last week’s jazz selections.” Explanations like this reassure users and demystify the process.

Together, these ideas form a powerful model for personalization that doesn’t need to compromise user trust.
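To make the fence-and-explain idea concrete, here is a minimal Python sketch under stated assumptions. The blocked categories and sources, the `Signal` record, and the functions `allowed` and `recommend` are hypothetical names invented for illustration, and the tag-overlap scorer is a deliberately trivial stand-in for a real machine-learning model. What the sketch shows is the ordering: rules filter the data first, the scorer only ever sees what passed, and every recommendation carries the human-readable reasons that produced it.

```python
from dataclasses import dataclass

# Hypothetical boundary rules: data that may never feed personalization.
BLOCKED_CATEGORIES = {"health", "religion", "private_message"}
BLOCKED_SOURCES = {"off_platform", "incognito"}


@dataclass(frozen=True)
class Signal:
    """One observed user behavior (hypothetical schema)."""
    tag: str          # topic the behavior relates to, e.g. "jazz"
    category: str     # data category checked by the boundary rules
    source: str       # where the signal was collected
    consented: bool   # did the user agree to its use for personalization?
    description: str  # human-readable text reused in explanations


def allowed(signal: Signal) -> bool:
    """Rules layer: hard boundaries applied before any model sees the data."""
    return (
        signal.consented
        and signal.category not in BLOCKED_CATEGORIES
        and signal.source not in BLOCKED_SOURCES
    )


def recommend(signals: list[Signal], catalog: dict[str, set[str]], top_k: int = 2):
    """AI within the fence: a toy tag-overlap scorer standing in for a real model.

    Returns (item, explanation) pairs so every recommendation carries its reason.
    """
    usable = [s for s in signals if allowed(s)]  # the scorer never sees blocked data
    scored = []
    for item, item_tags in catalog.items():
        reasons = [s.description for s in usable if s.tag in item_tags]
        if reasons:
            scored.append((len(reasons), item, reasons))
    # Most matching signals first; ties broken alphabetically for stable output.
    scored.sort(key=lambda r: (-r[0], r[1]))
    return [(item, "Because of: " + "; ".join(reasons)) for _, item, reasons in scored[:top_k]]


if __name__ == "__main__":
    signals = [
        Signal("jazz", "music", "on_platform", True,
               "your likes from last week's jazz selections"),
        Signal("sleep", "health", "on_platform", True, "a health-related search"),
        Signal("rock", "music", "off_platform", True, "activity on another site"),
    ]
    catalog = {
        "Late-Night Jazz": {"jazz"},
        "Sleep Sounds": {"sleep"},
        "Classic Rock": {"rock"},
    }
    for item, why in recommend(signals, catalog):
        print(f"{item}: {why}")
    # Late-Night Jazz: Because of: your likes from last week's jazz selections
```

In this example the health-related search and the off-platform activity never reach the scorer, so no recommendation can be traced back to them. Keeping the rules as plain data (two sets) is one way to make them easy to review and audit.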

Giving Users a Voice

One of the most critical, and often overlooked, aspects of personalization is giving users agency. Personalization should never feel inevitable or inescapable. Features that let users adjust, correct, or opt out of specific personalization streams empower them and foster a healthier relationship between people and the systems they use.

Leading platforms now include:

- “Why am I seeing this?” buttons next to content

- Privacy settings dashboards that clearly outline what’s being tracked

- Feedback mechanisms that allow users to flag inappropriate personalization

These aren’t just nice-to-have features—they are essential for keeping personalization ethical and user-aligned.
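The three controls listed above could be backed by something as simple as the following Python sketch. It is an illustration under assumptions, not any platform’s actual implementation: `UserControls`, `personalize`, the stream names, and the fallback explanation text are hypothetical. Candidates arrive as (item, topic, reason) triples from whatever recommender sits upstream, and the user’s opt-outs and flags are applied last, so they always take precedence over the model.

```python
from dataclasses import dataclass, field


@dataclass
class UserControls:
    """Hypothetical per-user settings behind the controls listed above."""
    opted_out_streams: set[str] = field(default_factory=set)    # e.g. {"home_feed"}
    flagged_tags: set[str] = field(default_factory=set)         # topics the user flagged
    explanations: dict[str, str] = field(default_factory=dict)  # item -> reason shown

    def opt_out(self, stream: str) -> None:
        """Privacy dashboard toggle: switch off one personalization stream."""
        self.opted_out_streams.add(stream)

    def flag(self, tag: str) -> None:
        """Feedback button: exclude a topic from future recommendations."""
        self.flagged_tags.add(tag)

    def why(self, item: str) -> str:
        """Answer for a "Why am I seeing this?" button."""
        return self.explanations.get(item, "This item was not personalized for you.")


def personalize(stream: str, candidates: list[tuple[str, str, str]],
                controls: UserControls) -> list[str]:
    """Apply the user's settings to (item, tag, reason) candidates from a recommender."""
    if stream in controls.opted_out_streams:
        return []  # the user turned this stream off entirely
    shown = []
    for item, tag, reason in candidates:
        if tag in controls.flagged_tags:
            continue  # respect earlier feedback
        controls.explanations[item] = reason  # record why, for the button
        shown.append(item)
    return shown


if __name__ == "__main__":
    controls = UserControls()
    candidates = [
        ("Jazz Mix", "jazz", "You liked jazz playlists last week."),
        ("Diet Plan", "weight_loss", "You viewed fitness articles."),
    ]
    controls.flag("weight_loss")
    print(personalize("home_feed", candidates, controls))  # ['Jazz Mix']
    print(controls.why("Jazz Mix"))  # You liked jazz playlists last week.
    controls.opt_out("home_feed")
    print(personalize("home_feed", candidates, controls))  # []
```

Applying the user’s settings after the recommender, rather than hoping the model learns them, is a deliberate choice: an opt-out or a flag then takes effect immediately and predictably, which is the same rules-over-black-box argument applied to user controls.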

Benefits of Rule-Based Personalization

Moving to a rules-first model of AI doesn’t just reduce creepiness—it introduces many downstream benefits:

- Improved trust: When people understand and influence the system, they’re more likely to engage with it.

- Regulatory compliance: Clear rules make it easier for companies to comply with GDPR, CCPA, and emerging AI accountability laws.

- Reduced risk of bias: Transparent rules are easier to audit for fairness, equity, and potential discrimination.

- Sustainable innovation: By aligning closely with user values and expectations, companies can innovate responsibly without backlash.

Are There Trade-Offs?

Some may argue that handcuffing AI with predefined rules limits its potential. It’s true: you may lose some of the highly nuanced inferences that deep learning models can provide. But the question we need to ask is:

“Is gaining a small lift in conversion or engagement worth losing user trust—and perhaps future loyalty?”

In the long run, respectful personalization proves more valuable. Ethical systems aren’t just morally superior; they are often more sustainable for the business, too. Users who feel seen and respected are far more likely to stick around.

The Future: Human-Centered AI Design

As AI gets smarter, our standards must rise with it. Intelligence without empathy is not an asset—it’s a risk. Building personalization systems that prioritize user dignity, understanding, and safety leads to more resilient consumer relationships.

The future lies in human-centered AI design. This means making systems that work for us, not ones that silently farm our data to optimize corporate outcomes without consent.

The challenge is significant, but surmountable. By favoring rules over opaque algorithms, we not only preserve trust—we enhance it.

Conclusion

Personalization can be a superpower of the digital age or a surveillance nightmare. The determining factor is how much transparency, control, and ethics we bake into its foundation. Black-box AI may offer short-term gains, but rules-based personalization promises long-term trust and success. It’s time to start designing personalization experiences that users don’t just tolerate but genuinely love.

Let’s make personalization brilliant—not bizarre.